(Previously: Extreme D&D DIY: adventures in hypergeometry, procedural generation, and software development (part 3))

Choice of data format is critical in a project like this.

As noted in my last post, BigVTT will support map background images (as well as images for tokens, objects, etc.) in as many image file formats as permitted by the capabilities of modern browsers. PNG, JPEG, GIF, WebP—if the browser can handle it, then BigVTT should be able to do likewise.

However, the preferred map image format—and the format which will be used internally, at runtime, to represent the map and objects therein—will be SVG. There are several reasons for this, and we’ll discuss each one—but you can probably anticipate most of the advantages I’m about to list simply by recalling the acronym’s expansion: Scalable Vector Graphics.

Vector/bitmap asymmetry

Bitmap graphics and vector graphics are asymmetric in two ways:

- A vector image file can contain bitmap data, but not vice-versa.

- It is trivial to transform a vector image into a bitmap, but the reverse is much, much harder.

This fundamental asymmetry is, by itself, enough to tilt the scale very heavily in favor of using a vector file format as the basis for BigVTT’s implementation, even if there were no other advantages to doing so. (Indeed, the vector format would have to come with very large disadvantages to outweigh the asymmetry’s impact.)
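To make the first half of the asymmetry concrete, here is a minimal sketch of a bitmap being carried inside a vector file. The function name `wrap_bitmap_in_svg` and the placeholder bytes are my own illustration, not part of BigVTT; the sketch uses the plain `href` attribute, which modern browsers accept (older renderers may expect `xlink:href` instead).

```python
import base64
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"

def wrap_bitmap_in_svg(png_bytes: bytes, width: int, height: int) -> str:
    """Return an SVG document whose only content is the given bitmap."""
    ET.register_namespace("", SVG_NS)
    root = ET.Element(f"{{{SVG_NS}}}svg", {
        "width": str(width),
        "height": str(height),
        "viewBox": f"0 0 {width} {height}",
    })
    # The bitmap travels inside the vector file as a base64 data URI.
    data_uri = "data:image/png;base64," + base64.b64encode(png_bytes).decode("ascii")
    ET.SubElement(root, f"{{{SVG_NS}}}image", {
        "href": data_uri,
        "width": str(width),
        "height": str(height),
    })
    return ET.tostring(root, encoding="unicode")

# Placeholder bytes stand in for the contents of a real PNG file.
svg_text = wrap_bitmap_in_svg(b"\x89PNG placeholder", 1400, 1050)
```

Nothing comparable exists in the other direction: a PNG has no place to put a path or a polygon, except by rasterizing it.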

Compared to what?

Let’s take a step back and ask: what exactly are the alternatives, here? That depends, of course, on what we need our data format to do; and that depends on the purpose to which it’s to be put.

For what purpose?

Well, what is this a data format for, exactly? And what do we mean to do with the data stored thus?

Map background image

The term “map” is used in multiple ways in the VTT space, so we’ll have to be careful with our language.

We can think of the totality of all the entities or “pieces” which make up the VTT’s representation of the game world as being arranged into layers.

This is necessarily a somewhat loose term. Many VTTs (e.g., Roll20) do indeed organize the content of a “scene” or “room” or “page” into “layers” (in Roll20’s case, for example, there are four: the map layer, the token layer, the GM layer, and the lighting layer), and these are often modeled, in one way or another, on the way that the concept of layers is used in vector drawing programs like Adobe Illustrator. (This is only natural; VTTs and drawing apps share many similarities of purpose, so UX design parallels are to be expected and, indeed, often desirable.) However, the way that the general concept of “layers” is instantiated varies from VTT app to VTT app (and some VTTs do not make use of layers in their design at all—to their detriment, I generally think), so we cannot rely on existing consistent, sharply drawn definitions of any of the terms and concepts we will need to use.

What I’m going to call the map background image is the “lowest” layer of the VTT’s representation. There is nothing “underneath” this layer (hence, it is necessarily opaque—has an alpha value of 100%, both in the visual sense and in any functional sense, if applicable—and either fully covers the entire mapped region or else relies on a guarantee that any gaps in this layer will be covered by specific other layers).

The content of the map background image, in terms of what it represents in the game world, depends on what sorts of things the VTT must represent and model.

For example, suppose that we are constructing a map for a dungeon. Should the map background image include the dungeon walls? Well, that depends on whether the VTT needs to model those walls in any way (e.g., to determine sight lines for fog-of-war reveal operations). In the simplest case, where the code does not need to know anything about the walls (because either there is no fog of war, or the DM will use the region reveal tools to manage the fog of war manually; and there are no token movement constraint features, etc.), the map background image layer can include the walls. Similar considerations apply to the question of whether the map background image should contain objects (such as furniture, statuary, water features, etc.), terrain elements (such as mountains), etc.

Is it better for the map background layer to be composed of a single bitmap image? Or multiple bitmaps (possibly embedded in a vector file format)? Or vector-graphical data exclusively? Does it make any difference at all?

An obvious answer might be that the map background image can just be a bitmap, because there’s no need for it to have any internal structure—in other words, it won’t contain any elements that need to be modeled. (Because any such elements would instead be contained in other, “higher” layers.) And storing a single background image, that depicts what is essentially 2D data, as a bitmap, is maximally easy.

On the other hand, there are two considerations that motivate the use of a vector format even in this case.

First, there’s the fact that vector graphics can be rendered well at any zoom level (that’s the “Scalable” part of “Scalable Vector Graphics”). This applies to map background images in particular in the form of patterns or textures; we can represent regions of the map via patterns/textures which are rendered in a scale-dependent way, rather than consisting merely of a static bitmap. This is an element of visual quality, and so at first may not seem to matter very much; but when pushing the bounds of what sorts of creations are possible (in the dimension of size, for instance, as we are doing here), what is unimportant at “ordinary” points in the distribution may become critical as we move up or down the scale. In other words: blow up a bitmapped map texture to 2x scale, and it is perhaps slightly blurry; blow it up to 200x scale, and it’s either a completely unusable mess, or a solid block of color, or something else that is no longer the thing that it should be.

But there is a second, and even more important, consideration: what if the very lowest layer of the representation nevertheless contains elements that should be modeled?

How might this happen? Well, suppose that we wish to know the terrain type of any given map region. (In a dungeon or indoor setting, this might be “floor type”.) And why would we want to know this? Because it might affect character movement rate across regions of the map.

Remember our list of requirements for BigVTT? One of them was “measurement tools”. What are we measuring? Certainly distance, if nothing else (perhaps other things like area, as well), but distance for what purpose? The two most common uses of distance measurements in D&D are (a) spell/attack ranges and (b) movement rates. When measuring distances for the purpose of calculating spell/attack ranges, there is no reason to care about terrain/floor type. But when measuring distances for the purpose of determining how far and where a character can move in any given unit of time/action, terrain considerations suddenly become very important!

Now, this doesn’t mean that we have to model terrain effects on every map we construct. But we certainly might wish to do so, in keeping with BigVTT’s design goals. And that means that either the lowest map layer will have to store region data (that is, some mapping from map coordinates to terrain metadata, the most obvious form of which is a set of geometrically defined regions—each with some metadata structures attached—which together tile the map), or else we will need to apply terrain region data as an out-of-band metadata layer on top of what is otherwise an undifferentiated (presumably bitmap-encoded) map background layer. These two approaches are essentially isomorphic; even if the visual representation of our map background image consists of some sort of bitmap data, either in one large chunk or sliced up into smaller chunks, nevertheless this layer as a whole should be capable of representing more structure than simply “a featureless grid of pixels”.
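As a rough sketch of what “a mapping from map coordinates to terrain metadata” could look like, here is one possible shape for it: polygonal regions with a movement-cost multiplier attached, plus a point lookup. The names (`TerrainRegion`, `movement_cost`, `terrain_at`) and the example values are assumptions of mine, not a settled BigVTT design.

```python
from dataclasses import dataclass

Point = tuple[float, float]

@dataclass
class TerrainRegion:
    name: str
    polygon: list[Point]     # vertices in map coordinates, in order
    movement_cost: float     # multiplier; 1.0 means normal movement

def contains(polygon: list[Point], p: Point) -> bool:
    """Even-odd ray casting test: is point p inside the polygon?"""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the horizontal line through p?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def terrain_at(regions: list[TerrainRegion], p: Point):
    """Return the first region containing p, or None if no region does."""
    for region in regions:
        if contains(region.polygon, p):
            return region
    return None

regions = [
    TerrainRegion("flagstone floor", [(0, 0), (10, 0), (10, 10), (0, 10)], 1.0),
    TerrainRegion("rubble", [(10, 0), (20, 0), (20, 10), (10, 10)], 2.0),
]
```

Whether these regions live inside the map file or in a separate metadata layer, the lookup is the same, which is the sense in which the two approaches are isomorphic.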

It should be noted at this point that it is possible to encode things like terrain effects (and other region metadata) in a bitmap format. After all, that’s what maps are. But programmatically extracting structured metadata from a bitmap is a much harder problem than parsing an already-structured data format. (Perhaps AI will change this, but for now the disparity remains.) And, of course, a structured format can store vastly more data than a bitmap, for the simple reason that there’s no limit to how much metadata can be contained in the former, whereas there are obvious information-theoretic limitations on what may be encoded in a bitmap (and pushing the boundaries of those limitations inevitably leads to a sharply increasing algorithmic complexity).

Fixed non-background features

Here we are referring to things like walls. (In outdoor maps, elements like cliffs, trees, etc. may also fall into this category.) These may be part of the map background image (see previous section) in a simple scenario where we don’t need to model lines of sight and constraints on token movement/position. However, as the feature list for BigVTT includes fog of war and similar functionality, we do need to be able to model this sort of thing; thus we’ll need to have some way of representing walls.

How should such things be stored and modeled? One way (used by Owlbear Rodeo, for instance) is to have a layer which acts as an alpha mask (a 1-bit mask, in Owlbear Rodeo’s case), and encodes only the specific functional properties of walls or other fixed features, while the visual representation of those features remains in the map background image. Another way, also an obvious one, is to have multiple bitmap layers (each with a 1-bit alpha mask attached); thus, e.g., layer 0 (the map background image layer) would contain the dungeon floor, while layer 1 (the fixed features layer) would contain the walls. (One interesting question: consider the spot on the map occupied by a wall in layer 1; what is at that spot in layer 0? It could be nothing—that is, a transparent gap—or it could be more dungeon floor. The former arrangement would result if we took an existing, single-layer, bitmap and sliced it up to extract the walls into a separate layer; the latter could—though not necessarily would—result if we drew the map in multiple layers to begin with. Note that these two approaches have different consequences in scenarios where fixed map features can change—see below.) And, of course, we can also store map features like this as objects (possibly with textures or bitmap background images attached) in some sort of vector or otherwise structured format.

Here an argument similar to the one given in the previous section applies: we should store and model fixed non-background features in as flexible and powerful a way as possible, because doing so allows us to properly represent and support a variety of tactical options in gameplay.

What do I mean by this? Well, consider this: can walls change?

Put that way, the answer is obvious: of course they can. A wall can simply be smashed through, for one thing (with mining tools, for instance, or adamantine weapons, or a maul of the titans). Spells like disintegrate or stone shape can be used to make a hole in a dungeon wall; passwall or phase door can be used to make passageways where none existed. And what about an ordinary door? That’s a wall when it’s closed, but an absence of wall when it’s open, right? (Of course you could say that doors—and windows, and similar apertures—should be modeled as stationary foreground features, as per the next section; but this is a fine line to walk, and in any case doesn’t account for the previous examples.)

It’s clear that (a) these scenarios all have implications for BigVTT functionality (fog of war / vision), and (b) we should ensure that such things are robustly modeled, so as to do nothing to discourage tactics of this sort (which make for some of the most fun parts of D&D gameplay). This means that walls and other fixed non-background features must be stored and modeled in a way that makes them easy to modify, and which allows us to represent the consequences of those modifications no less correctly and completely than we represent the unmodified map.
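One way to picture “easy to modify” is to store walls as individual, named line segments and derive sight lines from whatever segments currently exist. The following is only a sketch under that assumption; the helper names and the dictionary-of-segments layout are hypothetical.

```python
Point = tuple[float, float]
Segment = tuple[Point, Point]

def _orient(a: Point, b: Point, c: Point) -> float:
    """Twice the signed area of triangle abc; the sign gives the turn direction."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])

def segments_cross(s: Segment, t: Segment) -> bool:
    """True if the segments properly intersect (touching endpoints are ignored)."""
    (a, b), (c, d) = s, t
    return (_orient(a, b, c) * _orient(a, b, d) < 0
            and _orient(c, d, a) * _orient(c, d, b) < 0)

def has_line_of_sight(viewer: Point, target: Point, walls: dict[str, Segment]) -> bool:
    """Sight is blocked if the viewer-target segment crosses any wall segment."""
    return not any(segments_cross((viewer, target), w) for w in walls.values())

walls = {"north-wall": ((0.0, 5.0), (10.0, 5.0))}
viewer, target = (5.0, 0.0), (5.0, 10.0)

blocked_before = not has_line_of_sight(viewer, target, walls)

# Someone casts disintegrate: the wall is simply removed from the model,
# and vision follows from the updated data with no further bookkeeping.
del walls["north-wall"]
visible_after = has_line_of_sight(viewer, target, walls)
```

With a 1-bit mask or a flattened bitmap, the same change means repainting pixels; with structured wall data it is a one-line edit.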

Stationary foreground objects

This is stuff like furniture, statuary, etc. It could (depending on implementation) also include things like doors (see previous section). In outdoor environments, trees could also be included in this layer; things like large boulders, likewise.

Stationary foreground objects may or may not obscure sight. They may or may not have implications for movement. (That is, it may or may not be possible to move through spaces occupied by such objects; if it is possible to move through them, they may or may not impose modifications to movement rates.)

This variability in how objects of this kind interact with movement and vision suggests, once again, that we must aim for a very flexible way of storing and modeling such things. (The emerging theme here is that our data format should do nothing, or as little as possible, to constrain our ability to model the various ways in which characters may interact with the various in-game entities represented by elements of the map. We should even strive to minimize any friction imposed on those interactions by our UI design—it should not only be possible, but easy, to represent with our map all the things that characters may do with the things that the map represents.)

It might be useful to consider one small concrete example of the sort of flexibility that we want. Suppose that the player characters are in a forest. How should we model the trees? Having them be fixed background features seems intuitively obvious (they certainly obscure vision, right?). But now suppose that one of the trees turns out to be a treant. We do not want the players to have any hint of the “tree”’s true nature until and unless it decides to make itself known, so certainly it should behave (in terms of the map representation) like any other tree. But when the treant begins to move, speak, attack, etc., it should behave like a token (see below). We do not want the DM to have to do anything tedious, annoying, or difficult to manage this transition.

(Think twice before you suggest any jury-rigged solutions! For example, suppose we say “ah, simply let the DM have a treant token prepared, either sitting in some hidden corner of the map or else stored, fully configured, in the token library [we assume such a feature to exist], to be placed on the map when the treant animates”. Fine, but what of the tree object? The DM must now delete it, no? Well, that’s not so onerous, is it? But what if there are multiple treants? Now the DM must place multiple tokens and delete multiple objects. Still not a problem? But now we enter combat, and the DM—spontaneously, in mid-combat—decides that the treant should use its animate trees ability to make two normal trees into treants—with selection of candidate trees being driven by tactical considerations, thus much less amenable to preparation. Suddenly the DM is spending non-trivial amounts of time messing around with tokens and objects, slowing down the pace of combat. Not good.)

Situations like this abound, once we think to look for them. A boulder might turn out to be a galeb duhr; a statue is actually an iron golem; an armoire is a mimic waiting to strike. This is to say nothing of magical illusions, mundane disguises, etc. Anything might turn out to be something else. And, of course, the simplest sort of scenario: an object (such as a statue), be it as mundane and naturally inert as you like, may simply be moved or destroyed!

It would be best if our map representation robustly supported this inherent changeability and indeterminacy by means of a flexible data model. As before, we do not have to model any of the things I’ve just described, on any given map for any given scenario. But we may very well wish to do so, without thereby exceeding the scope of BigVTT’s basic feature roadmap.
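To illustrate the treant case, here is a minimal sketch in which trees and tokens are the same kind of record, so that “the tree wakes up” is a single in-place change rather than a delete-and-replace. The class, field, and function names are hypothetical, and the `stats` payload is a placeholder rather than real game data.

```python
from dataclasses import dataclass, field

@dataclass
class MapEntity:
    entity_id: str
    name: str
    position: tuple[float, float]
    blocks_sight: bool = True
    is_token: bool = False                    # tokens can be selected and moved
    stats: dict = field(default_factory=dict)

def promote_to_token(entity: MapEntity, revealed_name: str, stats: dict) -> MapEntity:
    """Turn an object into a token in place: same id, same position."""
    entity.name = revealed_name
    entity.is_token = True
    entity.stats = dict(stats)
    return entity

tree = MapEntity("e17", "Oak tree", (12.0, 40.0))
promote_to_token(tree, "Treant", {"notes": "see stat block"})
```

Because nothing is deleted or re-placed, the DM can do this to any tree on the map, mid-combat, without preparation.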

Disguised & hidden elements

This is really more of the same sort of thing as we’ve already mentioned, across the previous several categories, just made more explicit. Basically, we are talking about any variation of “it seems like one thing, but is a different thing”. This might include:

- creatures disguised as inanimate objects (or even as walls, floors, etc.)

- illusionary walls (or, more generally, parts of the environment that look like they are real things but actually aren’t)

- invisible walls (or statues or couches or anything)

- entire parts of the environment that look different from how they actually are (via something like the mirage arcana spell)

- secret doors

- concealed traps

But wait! What exactly is the difference—as far as VTT functionality goes—between “it’s one thing, but (due to disguises or magic or whatever) looks like some other thing”, and “it’s one thing, but then it transforms into another thing”? Don’t both of those things involve, at their core, a change of apparent state? A wall that can disappear and a wall that wasn’t there in the first place but only looked like it was—will we not represent them, on the map as displayed to the players, in essentially the same way?

This suggests that what we need to store and model is something as broad as “alternate states of elements within the map representation, and a way of switching between them”.
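A sketch of what “alternate states, and a way of switching between them” might mean in data terms follows. Everything here (the class names, the `appearance` field, the two state names) is an assumption for illustration; the same structure would serve a secret door, an illusory wall, or a wall that gets smashed.

```python
from dataclasses import dataclass

@dataclass
class ElementState:
    appearance: str          # what is drawn on the players' view
    blocks_sight: bool
    blocks_movement: bool

@dataclass
class MapElement:
    element_id: str
    states: dict[str, ElementState]
    current: str

    @property
    def state(self) -> ElementState:
        return self.states[self.current]

    def switch_to(self, name: str) -> None:
        if name not in self.states:
            raise KeyError(f"unknown state: {name}")
        self.current = name

secret_door = MapElement(
    element_id="door-3",
    states={
        "hidden": ElementState("stone wall", blocks_sight=True, blocks_movement=True),
        "open": ElementState("open doorway", blocks_sight=False, blocks_movement=False),
    },
    current="hidden",
)
secret_door.switch_to("open")
```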

And, of course, it’s not just walls or other fixed elements that can change, be disguised, be hidden, or otherwise be other than what they at some other time appeared to be—it’s also creatures (see the next section), effects (the section after that), etc.

Mobile entities (tokens)

Unlike all of the elements we’ve discussed so far, which at least possibly might be represented by a simple, single-layered bitmap image (albeit with considerable sacrifices of flexibility and ability to represent various properties and aspects of the game world), there is no possibility whatever of having tokens be simply a part of that static format. The movability of tokens, and their separateness from the map, is inherent to even the most narrowly construed core of virtual tabletop functionality.

What kinds of things are tokens? Without (for now) thinking too deeply about it, let us give a possibly-incomplete extensional definition. Tokens can represent any of the following:

- player characters

- NPCs

- monsters

- creatures of any sort, really

- certain magical effects, constructs, or entities that behave like creatures in relevant ways (e.g. a spiritual weapon, a Mordenkainen’s sword, a Bigby’s grasping hand, etc.)

- illusionary effects that appear to be any of the above sorts of things (e.g. a major image of a dragon)

- vehicles?

  - (Here we are referring to chariots, motorcycles, and the like; we probably wouldn’t represent a sailing ship, say, as a token… or would we?)

It might occur to us that one way to intensionally describe the category of “things that would be represented as tokens” might be “anything that might have hit points and/or status effects”. (We might then think to add “… or anything that appears to be such a thing”, to account for e.g. illusions.) However, that definition leads us to a curious place: what about a door (or a platform, or a ladder, or a statue, etc.)? Doors have hit points! Should a door be a token? As noted in the previous section (with the treant example), an object can become a creature very quickly (a treant can animate a tree; or a spellcaster can cast animate objects on a door or statue), or an apparent object can turn out to have been a creature all along. (Likewise, a creature can become an object, with even greater ease. The most common means of doing so is, of course, death.)

Is there, after all, any difference between tokens and stationary foreground objects? Or is the line between them so blurred as to be nonexistent?

On the other hand, it seems like it would be annoying and counterintuitive to, e.g., drag-select a bunch of (tokens representing) goblins, only to find that you’ve also selected miscellaneous trees, rocks, tables, etc., even if we’ve already decided to represent the latter sorts of things as independent objects in our model (and not just parts of the map background image). Some differentiation is required, clearly.
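The differentiation need not be a difference in kind of data. As a sketch (with hypothetical names throughout), tokens and objects could share one record type and differ only in a `kind` tag that selection tools filter on by default:

```python
from dataclasses import dataclass

@dataclass
class Entity:
    name: str
    x: float
    y: float
    kind: str   # "token" or "object"

def drag_select(entities, x0, y0, x1, y1, kinds=("token",)):
    """Return entities of the given kinds inside the dragged rectangle."""
    left, right = sorted((x0, x1))
    top, bottom = sorted((y0, y1))
    return [
        e for e in entities
        if e.kind in kinds and left <= e.x <= right and top <= e.y <= bottom
    ]

scene = [
    Entity("goblin 1", 10, 10, "token"),
    Entity("goblin 2", 15, 12, "token"),
    Entity("table", 12, 11, "object"),
    Entity("goblin 3", 90, 90, "token"),
]
selected = drag_select(scene, 20, 20, 0, 0)
```

Passing `kinds=("token", "object")` would select the table too, for the occasions when the DM does want to rearrange the furniture.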

In any case, how should we store and model tokens? As already noted, they are inherently distinct from the map. So we’ll have to store them separately, at the least. We’ll thus also need to store token position, state, and similar data, no matter what data format we are using for the map. What about internal structure? What is a token? If nothing else, it’s a graphical element of some sort, plus a non-graphical identifier. The rest, we can think about in more detail later.

Effects & phenomena

Here we are referring to things like a wall of fire, a magical gateway (e.g. one created by a gate spell), an area affected by an entangle spell, and other things of this nature. Such things are not exactly physical objects or parts of the terrain / architecture, and they’re not exactly creatures or similar autonomous entities, but they definitely exist in the game world, and they affect, and can be interacted with by, the player characters as they move around the mapped area. Effects & phenomena share many functional qualities with the sorts of things typically represented by tokens, and likewise share many functional qualities with the sorts of things that we might classify as “stationary foreground objects” or the like. (Indeed, there is considerable overlap: a wall of fire is an effect, but what about a wall of ice? Isn’t that pretty much your regular wall? But what about the “sheet of frigid air” left behind when a wall of ice is broken? How about a wall of stone? Clearly a regular wall, right? What about wall of force? It’s solid, but it doesn’t block sight! What about a wall of deadly chains…? And so on.) There’s also overlap with floor/terrain properties: what’s the difference between a part of the map that’s filled with mud, and the area of effect of a transmute rock to mud spell?

(Incidentally, what is the difference—as far as our model and representation goes—between the effect created when the PC wizard casts a wall of force in combat, and a dungeon which is constructed out of walls of force? Should there be any difference?)

We have already noted that effects may be mobile (e.g., a flaming sphere spell). But effects may also be linked to another entity (which is itself potentially mobile). A common type of such effects is illumination—that is, the light shed by a glowing object or creature (and if, e.g., a character is carrying a torch, we would generally model the character as casting light, rather than having a “torch” entity which casts light and is carried by the character). “Auras” of various sorts (e.g., a frost salamander’s cold aura) are another fairly common class of example.
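A linked effect can be sketched as an effect that holds a reference to its carrier and derives its position from it. The names below, and the 20-foot radius, are illustrative assumptions only, not values taken from any rulebook.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Entity:
    name: str
    position: tuple[float, float]

@dataclass
class Effect:
    name: str
    radius_ft: float
    anchor: Optional[Entity] = None                        # entity the effect follows
    fixed_position: Optional[tuple[float, float]] = None   # used when there is no anchor

    def center(self) -> tuple[float, float]:
        """Anchored effects are wherever their anchor currently is."""
        if self.anchor is not None:
            return self.anchor.position
        if self.fixed_position is None:
            raise ValueError("effect has neither an anchor nor a fixed position")
        return self.fixed_position

fighter = Entity("fighter", (10.0, 10.0))
torchlight = Effect("torchlight", radius_ft=20.0, anchor=fighter)
wall_of_fire = Effect("wall of fire", radius_ft=0.0, fixed_position=(40.0, 15.0))

fighter.position = (25.0, 10.0)   # the character moves; the light follows
```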

For now, rather than spending time on thinking deeply about how to represent things like this, we will simply note that effects & phenomena are another example of reasons to consider a wide variety of types of map elements to constitute an essentially continuous region in the space of “what sorts of things can be found on or in the map representation of a VTT”, and are yet another reason to prefer maximal flexibility in our data storage and representation format.

Elevation shifts

Not different dungeon levels; those should be represented by separate maps (or, perhaps, disjoint regions of a single map, with an enforced lack of continuity between them; more on that in a later post). No, here we are talking about something like, for instance, a raised platform or dais, in the middle of an otherwise flat dungeon room; or a sheer cliff face, cutting across part of an outdoor map; or two platforms, with a ramp between them; etc.

It is no trouble to depict such things visually, but need they be modeled in some way relevant to BigVTT’s functionality? Once again, it’s the distance measurement functionality which suggests that the answer be “yes” (essentially the same considerations apply as do to modeling different terrain/floor types). However, actually doing this may be quite difficult, and may require carefully constructed programmatic models of the specific movement-related game mechanics used in various TTRPG systems. It may be tempting to instead model elevation changes (that do not result in “dungeon level shifts”) via something akin to transparent walls. In any case, it’s clear that some means of representing such things is desirable, else we either forego support for maps with within-level elevation shifts, or else resign ourselves to our distance measurement tools giving mistaken output when used in parts of the map where such elevation shifts occur.
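The simplest possible version of elevation-aware measurement is straight-line distance in three dimensions, sketched below. This is an assumption-laden simplification: it is plausible for ranges, but movement rules in most systems are not simple Euclidean distance, so a real implementation would need system-specific logic layered on top.

```python
import math

def distance_3d(a: tuple[float, float, float], b: tuple[float, float, float]) -> float:
    """Straight-line distance between two (x, y, elevation) points, all in feet."""
    return math.dist(a, b)

# A target on the same level, versus one atop a 12-foot platform.
flat = distance_3d((0, 0, 0), (30, 40, 0))
raised = distance_3d((0, 0, 0), (3, 4, 12))
```

A measurement tool that ignores the third coordinate would report 5 feet for the second case rather than 13, which is the kind of mistaken output the paragraph above warns about.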

Fog, visibility, illumination, etc.

A detailed discussion of fog of war and related features (vision, illumination, exploration, etc.) must wait until a later post. For now it suffices to note that there are, in general, two approaches to this sort of thing: storing and modeling fog of war metadata entirely separately from the map itself, or inferring vision/fog from features of the map.

The latter obviously requires that we model the map in a more structured and complex way than, e.g., simply a single-layer bitmap image. We have, of course, already given many reasons why we might want to do that; to those we can now add the possibility of implementing more robust fog of war / vision behavior, without requiring any (or very much) additional work on the user’s (i.e., DM’s) part. Nevertheless, we may want to keep in reserve the option of storing an entirely independent fog layer (or layers), if doing so does not mean a significant amount of additional development effort.

The reason why we might wish to do this is that effort is conserved, because information is conserved; the data which defines fog / vision must come from somewhere, and if it comes from a structured map format then someone must have encoded that structure—and if that someone is the DM himself (as seems likely, given the near-total absence of existing RPG maps in vector formats), then it’s an open question whether it takes more work to create a map which has sufficient structure from which to infer fog / vision behavior, or to use a simple, single-layer map and add an independent fog / vision metadata map on top of it.

Whichever approach we choose, we will also want to model and store the current state of the fog of war and token vision (since the dynamic nature of the fog of war feature is its whole purpose). (This is one of several kinds of map state we might want to store, along with token position and status, etc.) It is plausible that such data will be stored separately from the map as such, but this is not strictly required. Finally, as with fog / vision itself, it is possible that the state of this map layer (or layers) can be inferred or constructed from other stored data, such as the action log (see below).
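As a sketch of the last possibility, here is what reconstructing fog state by replaying a log could look like. The log format (a list of dictionaries with `type` and `cells` keys) is entirely hypothetical, since the action log has not been specified yet; grid cells are used here only to keep the example small.

```python
def replay_fog(log):
    """Rebuild the set of revealed cells by replaying reveal/hide actions in order."""
    revealed = set()
    for action in log:
        if action["type"] == "reveal":
            revealed |= set(action["cells"])
        elif action["type"] == "hide":
            revealed -= set(action["cells"])
        # Other action types (token moves, etc.) do not affect the fog here.
    return revealed

log = [
    {"type": "reveal", "cells": [(0, 0), (0, 1)]},
    {"type": "move", "token": "fighter", "to": (0, 1)},
    {"type": "reveal", "cells": [(1, 1)]},
    {"type": "hide", "cells": [(0, 0)]},
]
current_fog_state = replay_fog(log)
```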

Map grid

Let’s start with the disclaimer that a grid is not, strictly speaking, necessary, in order to use a VTT for its intended purpose. (And many of the VTT apps listed in my last post correctly allow the grid to be disabled.) It is true that some TTRPG systems (e.g., D&D 4e) require the use of a grid, and many others (e.g., every other edition of D&D, to one degree or another) benefit from it; so there’s no question that we must support a grid (though we must never rely on its use).

Here, however, the key question is whether we need in any sense to store any grid-related data. The answer turns out to be “yes”—namely, grid scale and grid offset. That is, consider a map like this (we are using Roll20 for this example):

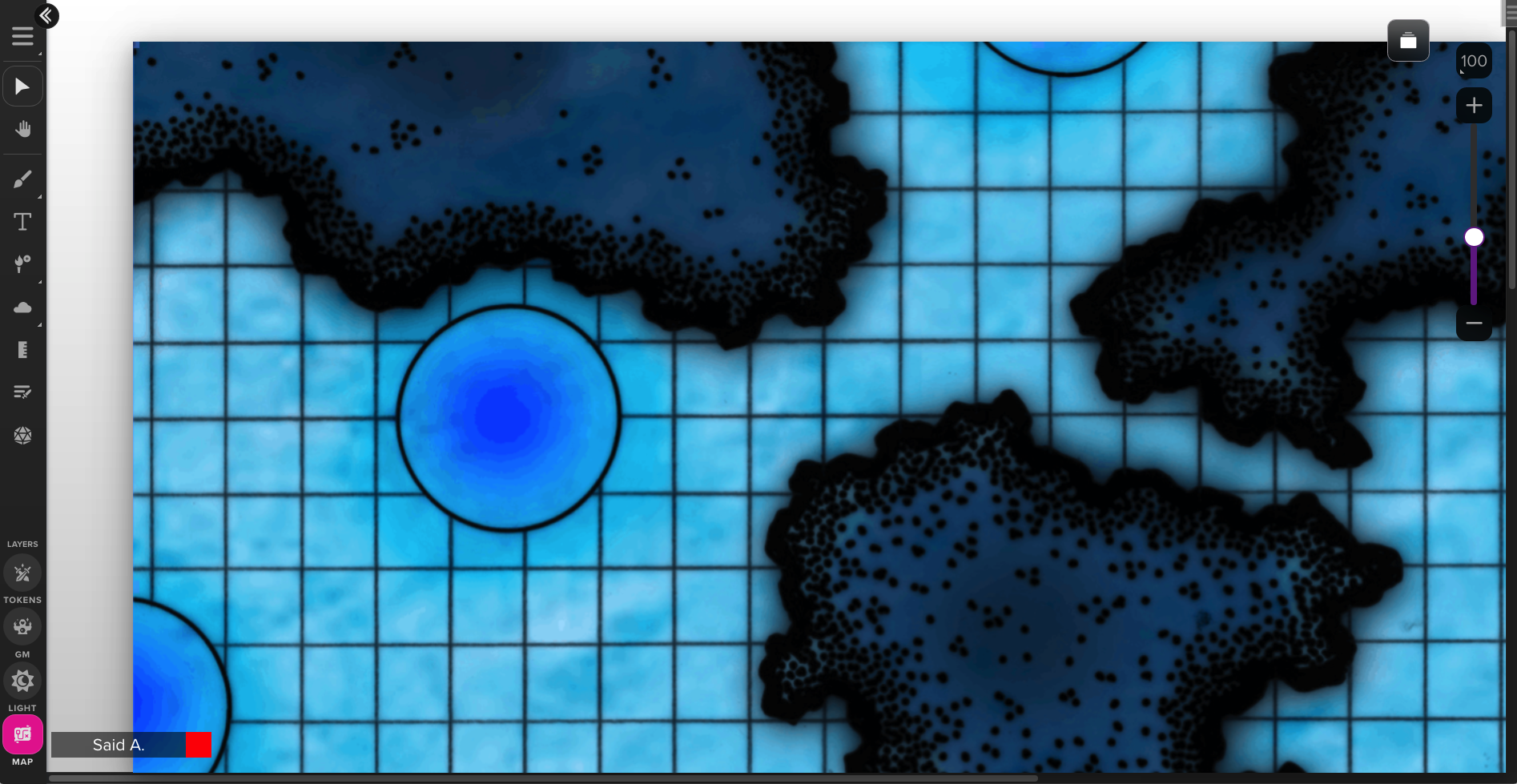

Figure 1. 100% zoom, 1:1 scale of map image pixels to VTT map pixels.

Now, how shall we apply a map grid to this dungeon map?

Note that the grid visible in figure 1 is a part of the imported map image, not a feature of the VTT. In other words, each grid square in the map image represents a 5-foot square of the mapped region—so the battle grid that our VTT imposes on this map must match that scale.

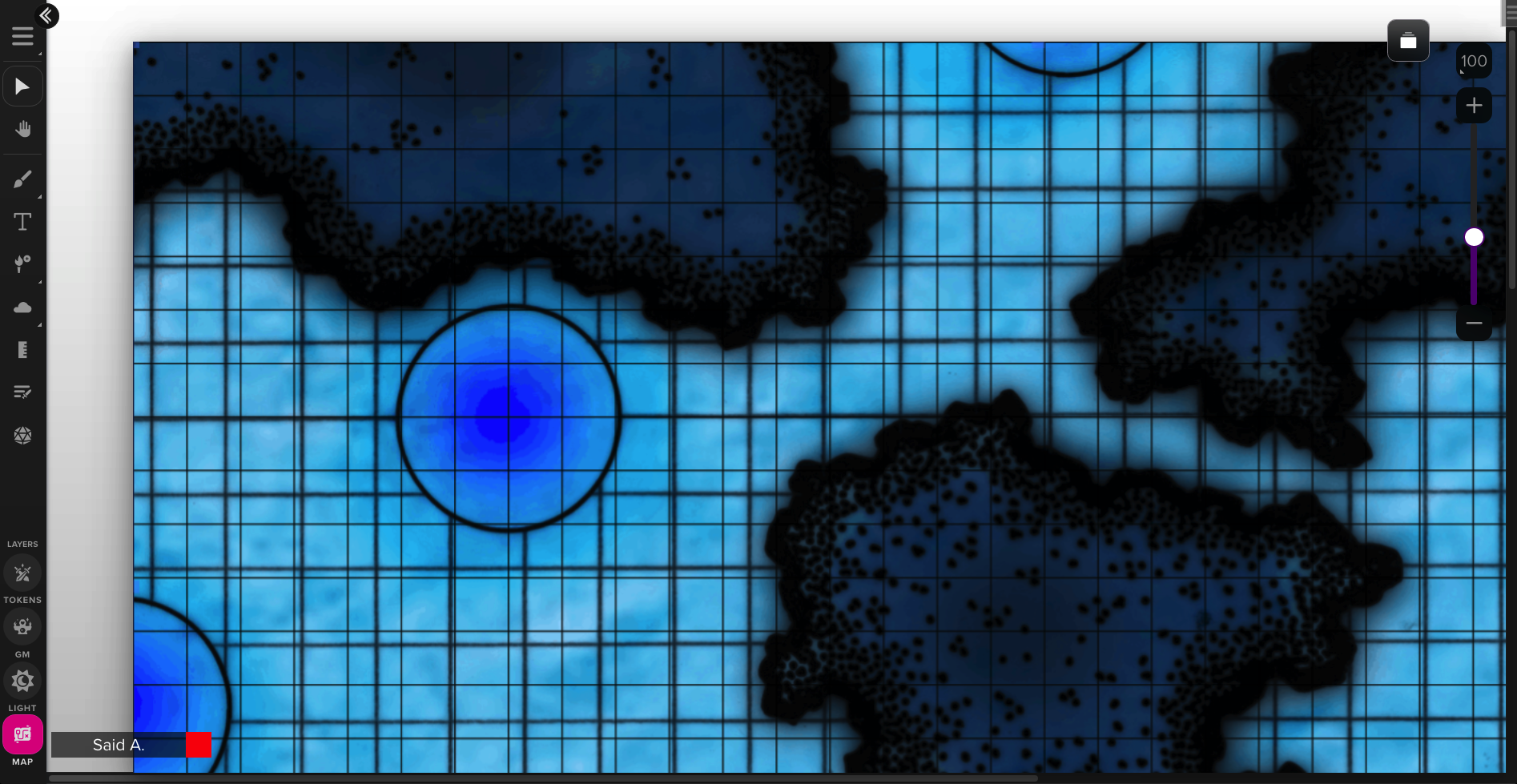

Suppose that we use 50-pixel grid squares. Applying such a grid to this map, we get this:

Figure 2. (Click to enlarge.)

Clearly not what we want; the VTT grid squares do not match the map’s own grid scale. To solve this, we can scale down the map image until the grid on the map matches the grid of the VTT, or we can make the VTT grid squares bigger. (These operations are obviously isomorphic; which is actually preferable depends on various factors which we need not discuss at this time, except to say that ideally, BigVTT should support both options; note that many existing VTTs only support one of these—and imperfectly, at that.)
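The arithmetic behind the two options is small enough to write down. In this sketch I assume that the squares drawn into the map image measure 70 pixels, and I invent a 2100×1400 image size purely for the example; the function name is likewise hypothetical.

```python
def scale_to_match(image_square_px: float, vtt_square_px: float) -> float:
    """Factor by which to scale the map image so its drawn grid matches the VTT grid."""
    return vtt_square_px / image_square_px

# Option 1: keep 50-pixel VTT squares and shrink the image to fit them.
factor = scale_to_match(image_square_px=70, vtt_square_px=50)
scaled_size = (round(2100 * factor), round(1400 * factor))  # hypothetical image size

# Option 2: leave the image alone and enlarge the VTT grid squares instead.
vtt_square_px = 70
```

Either way, the one number that has to be stored alongside the map is the ratio between image pixels and grid squares.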

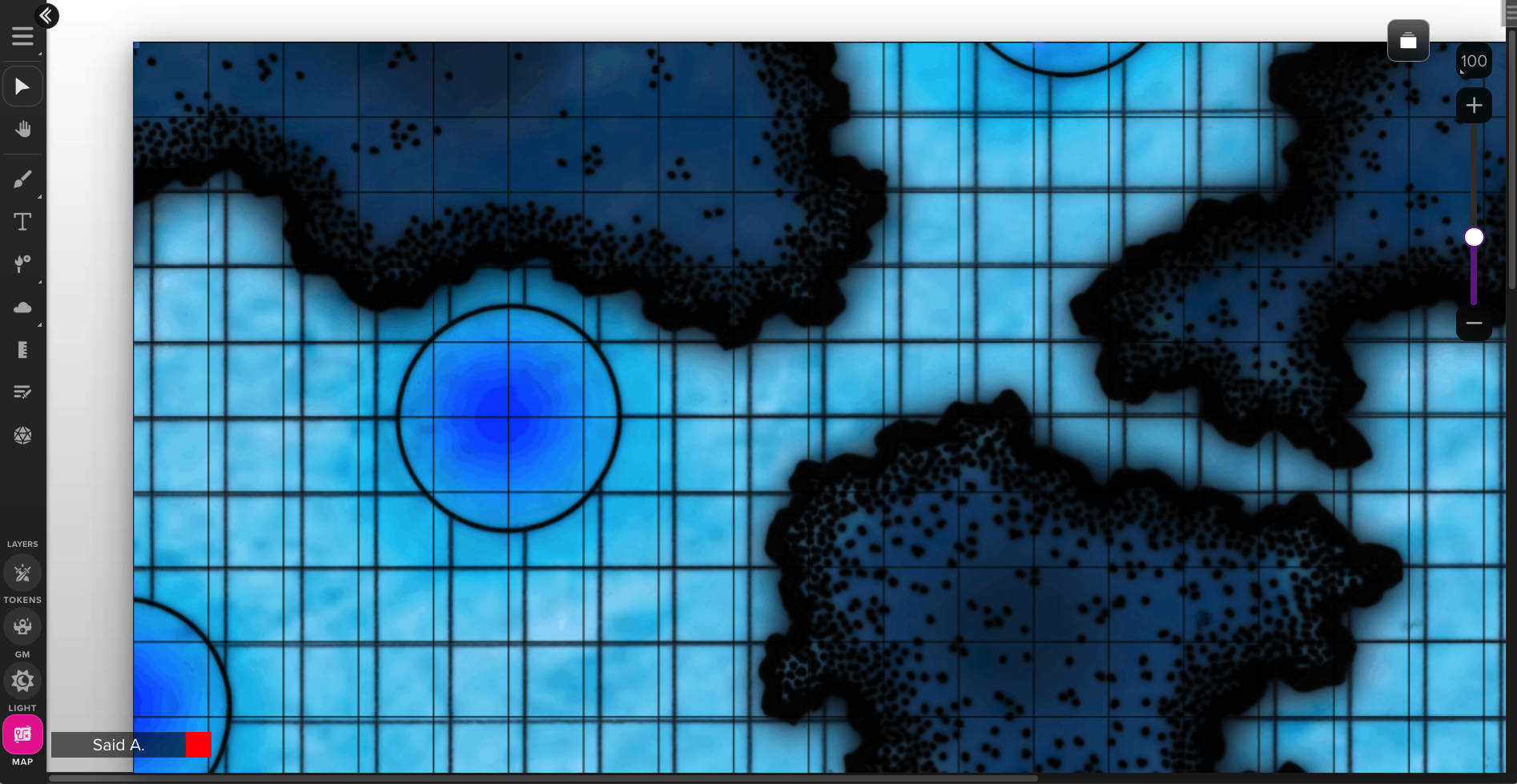

So, we go up to 70-pixel grid squares:

Figure 3. (Click to enlarge.)

Great, now the grid scales match. Unfortunately, the grid offset is wrong.

… now, what does that mean, exactly?

That is—we can see, visually, that something seems to be misaligned. But what exactly is the nature of the mismatch? After all, the grid has no reality in the game world. It’s not like the floor of this cavern is marked up with a 5-ft.-square rectilinear grid of lines! What difference does it make, actually, if we shift the grid 2.3 feet to the left? Those pixels in the map image which depict the cavern wall represent a physical wall in our fictional game world. Those pixels in the map image which form the grid represent… what? Nothing, right?

This is a perfect example of the limitations of using single-layer bitmap file formats for storing TTRPG maps. The depictions of the cavern walls and other in-game features are data; the grid is metadata. But a PNG file collapses these things into a single, undifferentiated block of pixels.

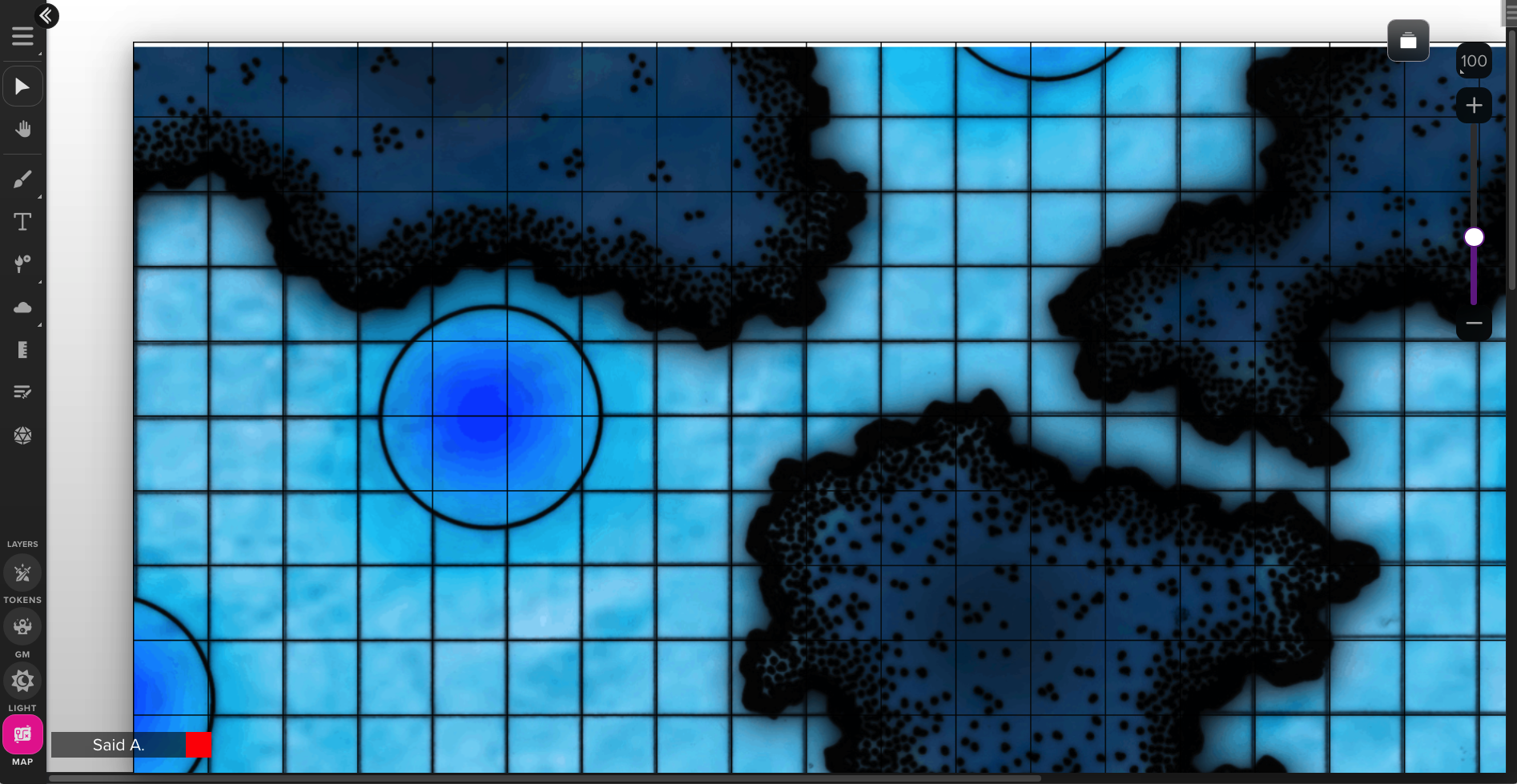

In any case, we might find it convenient to specify a grid offset in some cases—such as when we must use an existing map image in bitmap format which includes a grid. Thus:

Figure 4. (Click to enlarge.)

Much better; there is no longer a distracting mismatch between two grids.

One other reason why we’d need to specify a grid offset is if we are using the “snap to grid” feature (which any self-respecting VTT must have), which constrains things like token movement, measurement, shape drawing (e.g. for manual fog reveal purposes), etc., to align with, and occur in units corresponding to, the battle grid. To see why that’s important, consider this map:

Figure 5.

If we use snap-to-grid for token positioning on this map, with the grid aligned as pictured, then tokens will be snapped into a position which is embedded halfway into the walls of this 5-foot-wide dungeon corridor. What we want instead is for the map to be aligned such that snap-to-grid aligns the token precisely with the corridor:

Figure 6.

Much better.

Note that this time, the alignment of the battle grid with the grid in the map image itself is not, strictly speaking, what we are talking about. It quite unsurprisingly so happens that the grid in the map image is aligned with the dungeon hallways and rooms, but that need not be so. Indeed, the very same map from which the section shown in the above two screenshots was taken also contains bits like this:

Figure 7. The hallway pictured here is half the width of a 5-ft. grid square.

And this:

Figure 8.

How should snap-to-grid work in such cases? Probably the answer is “it shouldn’t”. (So there must be some way to disable it temporarily, for example, or some functionality along these lines.) Still, there is no reason to create problems where none need exist, nor to avoid useful features simply because they’re not always useful; so for those maps to which snap-to-grid may unproblematically apply, we must be able to specify a grid offset.
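Here is a minimal sketch of why the offset has to be part of the stored data: snapping is computed relative to the grid origin, so the same click lands in different places depending on the offset. The function and parameter names are my own, and snapping to square centers (rather than corners) is an assumption.

```python
import math

def snap_to_grid(x: float, y: float, square_px: float,
                 offset_x: float = 0.0, offset_y: float = 0.0) -> tuple[float, float]:
    """Snap a point to the center of the grid square that contains it."""
    col = math.floor((x - offset_x) / square_px)
    row = math.floor((y - offset_y) / square_px)
    return (offset_x + (col + 0.5) * square_px,
            offset_y + (row + 0.5) * square_px)

# The same drop point, with and without a half-square grid offset.
no_offset = snap_to_grid(100, 100, square_px=70)
with_offset = snap_to_grid(100, 100, square_px=70, offset_x=35, offset_y=35)
```

With 70-pixel squares, the first call snaps to (105.0, 105.0) and the second to (70.0, 70.0): half a square apart, which is exactly the difference between a token standing in the corridor and a token embedded in its wall.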

(Actually, we can go further still. There are other transformations that we may wish to apply to a map image in order to make it fit a battle grid more precisely, such as rotation, shear / skew, or distortion. For that matter, we can even apply arbitrary transformation matrices to the map image. All of the data that defines such transforms must also be stored somehow.)
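As a rough illustration of what “the data that defines such transforms” might look like, the sketch below stores a 2D affine transform as six numbers, in the same order that SVG’s `matrix(a b c d e f)` transform function uses. The type and function names are my own inventions for this example; nothing here is meant as BigVTT’s actual representation.

```typescript
// Six numbers [a, b, c, d, e, f], in the order used by SVG's matrix(a b c d e f):
//   x' = a*x + c*y + e
//   y' = b*x + d*y + f
type Affine = [number, number, number, number, number, number];

interface MapPoint {
  x: number;
  y: number;
}

function translation(tx: number, ty: number): Affine {
  return [1, 0, 0, 1, tx, ty];
}

function rotation(degrees: number): Affine {
  const r = (degrees * Math.PI) / 180;
  return [Math.cos(r), Math.sin(r), -Math.sin(r), Math.cos(r), 0, 0];
}

function horizontalSkew(degrees: number): Affine {
  return [1, 0, Math.tan((degrees * Math.PI) / 180), 1, 0, 0];
}

// Matrix product m * n: the result applies n first, then m.
function compose(m: Affine, n: Affine): Affine {
  const [a1, b1, c1, d1, e1, f1] = m;
  const [a2, b2, c2, d2, e2, f2] = n;
  return [
    a1 * a2 + c1 * b2,
    b1 * a2 + d1 * b2,
    a1 * c2 + c1 * d2,
    b1 * c2 + d1 * d2,
    a1 * e2 + c1 * f2 + e1,
    b1 * e2 + d1 * f2 + f1,
  ];
}

function applyAffine(m: Affine, p: MapPoint): MapPoint {
  const [a, b, c, d, e, f] = m;
  return { x: a * p.x + c * p.y + e, y: b * p.x + d * p.y + f };
}

// Serialize as a value for an SVG `transform` attribute.
function toSvgTransform(m: Affine): string {
  return `matrix(${m.join(" ")})`;
}
```

A grid offset is then just the special case `translation(dx, dy)`, and any combination of offset, rotation, and skew collapses into a single six-number record that is easy to store alongside the map image.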

Annotations

This encompasses a wide variety of map-linked metadata, of the sort that does not directly affect any of the VTT’s core functionality (e.g., positioning, token movement, fog of war, vision, etc.), but which exists to provide useful information to the DM and/or players during play. Examples might include:

- dungeon room key labels (i.e., alphanumeric tags associated with each keyed location; see The Alexandrian on dungeon keys)

- dungeon room key content (that is, the text associated with the labels—why not include it with the map, and display it on the same screen?)

- secret information (e.g., “if any character steps on that part of the floor, they will be teleported to area 27”)

  - Note that this overlaps to some extent with “disguised & hidden elements”; the key distinction, perhaps, is that by “secret information” we mean things that do not look like anything in the game world, but are only facts about other map elements

- map legend (i.e., guide to map symbols, or other hints for reading the map, displayed for easy reference)

- ephemeral region markers (e.g., “I’m going to throw a fireball right… there”)

- tags describing alterations performed by the characters (e.g., suppose that the PCs decide to start drawing crosses in chalk on the doors of dungeon rooms that they’ve explored)

In short, annotations may be textual, graphical, or some combination thereof; and they may be visible to the DM only or also to the players. In either case, they do not themselves correspond to any part of the game world (though they may describe things that do), and do not need to be modeled in any way. They do, however, need to be stored, for persistence across sessions.
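One way to picture the storage requirement is as a plain record per annotation, with a visibility flag, serialized to text between sessions. The sketch below is only that: the `MapAnnotation` shape, the `kind` values (taken from the list above), and the choice of JSON are assumptions for the sake of the example, not a settled design.

```typescript
// Hypothetical annotation record; the kinds mirror the examples listed above.
type AnnotationKind =
  | "room-label"
  | "room-text"
  | "secret"
  | "legend"
  | "region-marker"
  | "tag";

interface MapAnnotation {
  id: string;
  kind: AnnotationKind;
  text: string;
  dmOnly: boolean;                    // true: hidden from players
  anchor?: { x: number; y: number };  // optional map position
}

// Annotations a given viewer is allowed to see.
function visibleAnnotations(all: MapAnnotation[], viewerIsDM: boolean): MapAnnotation[] {
  return all.filter((a) => viewerIsDM || !a.dmOnly);
}

// Persistence across sessions, here as a simple JSON round trip.
function saveAnnotations(all: MapAnnotation[]): string {
  return JSON.stringify(all);
}

function loadAnnotations(json: string): MapAnnotation[] {
  return JSON.parse(json) as MapAnnotation[];
}
```

Note that nothing in the record needs to be modeled by the rest of the VTT; it only has to be stored, filtered by audience, and drawn.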

Active / interactive elements

We are now getting into relatively exotic territory, but it’s worth considering (though only in passing, for now) this fairly broad category, which may include any of the following:

- animations and audio cues linked to map regions or elements

- “hyperlinks” from one map region to another (e.g., suppose that clicking on a stairway shifts the map view to a section of the map that represents whatever different dungeon level the stairway leads to)

- defined regions which restrict token movement

- map-linked triggers for action macros (these may cause map transformations to occur; tokens to appear, disappear, move, or change in some ways; etc.)

And so on; this short list barely scratches the surface of the possible. (We are getting dangerously close to re-inventing HyperCard with this sort of thing… an inevitable occupational hazard when building user-configurable dynamic multimedia systems.)

Action history

Until now, we have mostly looked at elements which define the map as it exists in some state (which may include various potential alternate states, or conditional alterations to the map state, etc., but all of these things are merely part of the totality of a single actual state). There are, in addition, many good reasons why we would also want to store a history of updates to the map state. One obvious such reason would be to implement an undo/redo feature (surely a necessity if we want BigVTT to work as a map creation/editing tool). There are several other motivations for maintaining an action / update history; we will discuss them at length in a later post.
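For the undo/redo case specifically, the usual shape of such a history is a pair of stacks of reversible actions. The following is a generic sketch of that well-known pattern, with made-up names (`MapAction`, `ActionHistory`); it says nothing about how BigVTT will actually store its history, which is a topic for the later post.

```typescript
// A reversible update to some state of type S. Names are illustrative.
interface MapAction<S> {
  label: string;
  apply(state: S): S;
  revert(state: S): S;
}

class ActionHistory<S> {
  state: S;
  private done: MapAction<S>[] = [];
  private undone: MapAction<S>[] = [];

  constructor(initial: S) {
    this.state = initial;
  }

  // Apply a new action and record it. Doing something new discards
  // whatever was available for redo.
  perform(action: MapAction<S>): void {
    this.state = action.apply(this.state);
    this.done.push(action);
    this.undone = [];
  }

  // Returns false when there is nothing to undo.
  undo(): boolean {
    const action = this.done.pop();
    if (action === undefined) return false;
    this.state = action.revert(this.state);
    this.undone.push(action);
    return true;
  }

  // Returns false when there is nothing to redo.
  redo(): boolean {
    const action = this.undone.pop();
    if (action === undefined) return false;
    this.state = action.apply(this.state);
    this.done.push(action);
    return true;
  }
}
```

Because each entry carries a label and is just data plus two functions, the same list could in principle be serialized as a log, which is one reason to think about the history as part of the data format rather than as a purely in-memory feature.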

Is all of this really necessary?

It does seem like quite a lot, doesn’t it? Is everything we’ve just listed really a mere extrapolation from the list of requirements outlined in the previous post?

I think the answer is “with a few minor exceptions, yes, it is”; but anyhow, it doesn’t matter. The point is not that we must implement all of the features and capabilities which we’ve listed in this post (much less that we must implement them right away). Rather, the reason for enumerating all of these things is to keep them in mind when making fundamental design and implementation decisions. Specifically, when choosing a data format (or formats!), we should prefer to choose such that our choice will make our lives easier when we do start implementing some of the features and functions we’ve been discussing, and avoid choosing such that our choice will make our lives harder in that regard.

What, then, are the options?

It’s crystal-clear that a simple bitmap image format won’t cut it. Indeed, it seems quite plausible that a simple bitmap image format won’t cut it even for just the map background image—never mind anything else. Any use we make of bitmap image data will have to be incorporated into a more powerful, more complex data format.

The question is: what will be that more complex data format? There are, basically, two alternatives, which can be summarized as “SVG” and “some sort of custom format”.

In fact, are these even alternatives, as such? Mightn’t we need both, after all? For instance, suppose we store the map, as such, as an SVG file (somehow), and the action log as a separate text file. Why not? Or: suppose that the map is an SVG file, but then it contains references to various bitmap images, which are each stored as separate external PNG files. No rule against that, is there?

Another way in which “alternatives” isn’t quite the right way to look at this has to do with the fact that when you’re dealing with text-based file formats, there is no sharp dividing line between almost anything and almost anything else. For instance: SVG files can contain comments. What is the difference between (a) an SVG file and a separate text file and some separate PNG files, and (b) an SVG file with some text in a comment and some base64-encoded PNG data in another comment? (Well, the latter will take up a bit more disk space, but that’s hardly fundamental!)

The nitty-gritty of just how the various parts of our data storage mechanism will fit together is, ultimately, an implementation detail. What’s clear, at this point, is that our approach should probably be something like “start with SVG, see how far we can push that, and branch out if need be”. It’s possible, of course, that we’ll hit a wall with that approach, and end up having to rethink the data format from scratch. But that does not seem likely enough to justify trying to design a custom data format right from the get-go.

So what does SVG get us?

Other than the advantages already listed, I mean?

Quite a lot, as it turns out.

The drawing tools just about write themselves

Recall two of our requirements: we want to have map drawing/editing tools, and we want to have fog of war support (which means the ability to reveal/conceal regions of the map). In both cases, we need to let the user draw shapes, and we need to render those shapes on the screen.

Well, the SVG format supports pretty much all the kinds of shapes that any vector drawing app could want: rectangles and ellipses and polygons and lines and paths (Bézier curves)… these parts of the SVG spec effectively serve as guides for implementing the tools to draw these shapes: just create fairly straightforward mappings from user actions (clicks, pointer movements, etc.) to shape attribute values, and you’re good to go. Rendering is even more trivial: create a shape object, plug in the values, add it to the page. Easy!
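Here is roughly what such a mapping looks like for the simplest case, a rectangle drawn by dragging from one corner to the opposite one. The function and type names are hypothetical, and the sketch produces markup as a string so that it runs anywhere; in a browser one would more likely create the element and set the same four attributes on it.

```typescript
interface PointerPos {
  x: number;
  y: number;
}

// The four geometry attributes of an SVG <rect>.
interface RectAttrs {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Map a drag gesture (press position, release position) to <rect> attributes.
// The user may drag in any direction, so normalize to a top-left corner
// plus non-negative width and height.
function rectFromDrag(start: PointerPos, end: PointerPos): RectAttrs {
  return {
    x: Math.min(start.x, end.x),
    y: Math.min(start.y, end.y),
    width: Math.abs(end.x - start.x),
    height: Math.abs(end.y - start.y),
  };
}

// "Plug in the values": emit the shape as SVG markup.
function rectToSvg(r: RectAttrs): string {
  return `<rect x="${r.x}" y="${r.y}" width="${r.width}" height="${r.height}"/>`;
}
```

The same normalization serves both requirements: a fog-reveal rectangle and a map-editing rectangle differ only in what is done with the resulting shape.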

Version control

SVG is a text-based format. That means that we can store our map data in a Git repository, and track changes to it, very easily.

(Is this something that anyone will want to do? On a small scale—which describes most users—probably not. But this sort of capability tends to open up options that don’t exist otherwise. For instance, collaboration on a large project, that might involve creating many BigVTT-compatible maps for some large dungeon complex, or a series of adventure paths, or a whole campaign, etc., will be greatly advantaged by the use of a version control system.)

Extensible metadata

This is a fancy term that means no more than “the format makes it easy to add support for any kind of thing”. As an XML-based file format, SVG makes it trivial to attach arbitrary amounts and kinds of information to any object, or to the file as a whole, without breaking anything, affecting any existing feature, etc.
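As one hedged illustration of attaching extra information to an object, the sketch below writes application data as `data-*` attributes, which SVG 2 permits on SVG elements. This is only one option (custom XML namespaces and `<metadata>` elements are others), and the helper names and the example keys are made up.

```typescript
// Escape a string for use inside a double-quoted XML attribute value.
function escapeXmlAttr(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build a self-closing SVG element whose ordinary attributes come from
// `attrs` and whose application-specific data is written as data-* attributes.
function svgElementWithMetadata(
  tag: string,
  attrs: Record<string, string>,
  metadata: Record<string, string>
): string {
  const parts: string[] = [];
  Object.keys(attrs).forEach((key) => {
    parts.push(`${key}="${escapeXmlAttr(attrs[key] ?? "")}"`);
  });
  Object.keys(metadata).forEach((key) => {
    parts.push(`data-${key}="${escapeXmlAttr(metadata[key] ?? "")}"`);
  });
  return `<${tag} ${parts.join(" ")}/>`;
}
```

A renderer that knows nothing about the extra attributes simply ignores them, so the shape still draws as an ordinary circle or rectangle.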

Native support for many aspects of the design

Layers? Objects in an SVG file are naturally “layered” (the painter’s algorithm); objects can be grouped into uniquely identifiable groups; objects can be moved from layer to layer, or the layers rearranged; all of this is already a direct consequence of how the format works.

Object types? Tags and attributes. Media embedding? The <image> and <foreignObject> tags.

In short, we get many of the basics “for free”.
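To show how little is needed for layers in particular, here is a small sketch that treats each layer as an identified `<g>` group and relies on document order for stacking (later groups paint over earlier ones). The `MapLayer` type, the layer ids, and the idea of holding layer contents as markup strings are simplifications assumed for the example.

```typescript
// One layer: an id plus the SVG markup of the objects it contains.
interface MapLayer {
  id: string;
  content: string;
}

// Emit an SVG document with one <g> per layer, in order.
// Later groups are painted on top of earlier ones.
function renderLayers(layers: MapLayer[], width: number, height: number): string {
  const groups = layers
    .map((layer) => `  <g id="${layer.id}">${layer.content}</g>`)
    .join("\n");
  return `<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 ${width} ${height}">\n${groups}\n</svg>`;
}

// Return a new layer list with the given layer moved to `newIndex`.
// Rearranging layers is just reordering the groups.
function moveLayer(layers: MapLayer[], id: string, newIndex: number): MapLayer[] {
  const result = layers.filter((layer) => layer.id !== id);
  const moved = layers.find((layer) => layer.id === id);
  if (moved === undefined) return result; // unknown id: order unchanged
  result.splice(newIndex, 0, moved);
  return result;
}
```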

Composability

You can embed a bitmap image into an SVG. You can embed an SVG into another SVG. You can embed an SVG in a web page. You can embed a web page in an SVG.

As far as composability goes, if you’re working with 2D graphical data, SVG is hard to beat.

The DOM

Using SVG in a web app means having access to the Document Object Model API, which lets us manipulate all the objects that make up our map using a variety of powerful built-in functions. (“Select all character tokens”? That’s a one-liner. “Turn every wall transparent”? That’s a one-liner. “Make every goblin as big as a storm giant”? Oh, you better believe that’s a one-liner.)
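For the skeptical, here is approximately what those one-liners might look like. The class names (`token`, `wall`, `goblin`) and the scale factor are pure assumptions about how the map’s elements would be marked up. The two small interfaces exist only so that the sketch type-checks without the browser’s DOM typings; they are intended to be satisfied by a browser’s `document` (or by an inline SVG root element), whose `querySelectorAll` and `setAttribute` are the real DOM methods being relied upon.

```typescript
// Minimal structural stand-ins for the parts of the DOM used below.
interface AttrTarget {
  setAttribute(name: string, value: string): void;
}
interface QueryRoot {
  querySelectorAll(selectors: string): ArrayLike<AttrTarget>;
}

// "Select all character tokens."
function selectTokens(root: QueryRoot): AttrTarget[] {
  return Array.from(root.querySelectorAll(".token"));
}

// "Turn every wall transparent."
function hideWalls(root: QueryRoot): void {
  Array.from(root.querySelectorAll(".wall")).forEach((el) => el.setAttribute("opacity", "0"));
}

// "Make every goblin bigger." The factor is whatever the DM fancies.
// Note that a bare scale() scales about the coordinate origin, so a real
// version would probably also account for each token's position.
function enlargeGoblins(root: QueryRoot, factor: number): void {
  Array.from(root.querySelectorAll(".goblin")).forEach((el) => el.setAttribute("transform", `scale(${factor})`));
}
```

In a browser, the intended call would be along the lines of `hideWalls(document)`.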

Compatibility

SVG is supported by every graphical browser and has been for years. There exist innumerable drawing/editing tools that can edit SVG files. SVG can be easily converted to and from a variety of other formats. (There’s now even a way to transform bitmap images into SVG files!)

What’s next?

Upcoming posts will deal with four major aspects of BigVTT’s design: affordances for working with large maps, the fog of war features, the action history feature, and the rendering system.
